AI Control Panel: Human-on-the-Loop (HOTL) and Human-in-the-Loop (HITL) Explained


Unpacking the critical distinctions and synergies between the two modes of human involvement in AI.

This document details the concepts of Human-in-the-Loop (HITL) and Human-on-the-Loop (HOTL) AI, their distinctions, applications, future trends, and challenges.

The difference between these two concepts lies in active permission versus supervisory intervention.

- Human-in-the-Loop (HITL): The human is a required bottleneck. The AI system performs a task, but it cannot finalize the action or move to the next step without direct human approval or input. The human is an active participant in the workflow.

- Human-on-the-Loop (HOTL): The human is a safety net. The AI system operates autonomously and executes actions independently. The human monitors the system in real-time and only steps in to interrupt or override the AI if it makes a mistake or encounters a situation it cannot handle.

The Student Driver

Imagine a teenager learning to drive a car with their parent in the passenger seat.

The “In-the-Loop” Parent (Active Coach)

The teenager (the AI) is sitting in the driver’s seat, but the parent (the Human) has a set of dual-control pedals.

Every time the teenager wants to make a turn or merge lanes, they ask, “Can I go now?” The parent checks the road and says, “Yes, go,” or “No, wait.” The car does not move through the intersection until the parent explicitly gives the command.

- If the parent falls asleep, the car stops moving entirely.

The “On-the-Loop” Parent (Nervous Passenger)

The teenager has now become a decent driver. They are driving the car continuously without asking for permission.

The parent is sitting in the passenger seat, perhaps looking at their phone or eating a snack, but is still paying peripheral attention to the road.

They do not approve every turn.

However, if the teenager starts drifting into the wrong lane or fails to see a red light, the parent immediately grabs the steering wheel or yanks the handbrake to correct the error.

- If the parent falls asleep, the car keeps driving—potentially safely, potentially into a ditch.

I. The Smart-Garden Protocol

In the year 2045, Silas was the head groundskeeper for the Neo-Tokyo Botanical Dome, a massive indoor rainforest managed by a robotic swarm.

Phase 1: In-the-Loop

In the early days, the bots were clumsy. Silas operated the system using Human-in-the-Loop protocols.

A drone would hover over a patch of rare orchids, identify a withered leaf, and send a ping to Silas’s tablet: “Requesting permission to prune Leaf #402.”

Silas would look at the image on his screen, confirm it was indeed a dead leaf and not a resting butterfly, and tap [APPROVE]. The drone would then snip the leaf. Ten seconds later: “Requesting permission to water Soil Patch #89.”

Silas would check the moisture levels and tap [APPROVE]. The work was slow. Silas was exhausted. He was the brain; the bots were just the hands. Nothing happened without him.

Phase 2: On-the-Loop

Five years later, the software was upgraded to Human-on-the-Loop. Silas now sat in the control tower with his feet up, drinking coffee.

Below him, thousands of drones zipped through the air, pruning, watering, and fertilizing millions of plants autonomously. He didn’t tap “Approve” anymore. The drones made their own decisions based on the data.

Silas watched a giant dashboard of flowing diagnostics. Suddenly, a red light flashed on his monitor.

A drone had identified a visitor’s bright red hat as a “diseased hibiscus flower” and was preparing to prune it. Silas sat up and smashed the [OVERRIDE] button.

The drone froze inches from the visitor’s head and retreated. “Close one,” Silas muttered. Under this system, the work happened at lightning speed.

Silas was no longer the engine; he was the emergency brake.

- HITL (Phase 1): Silas had to say “Yes” for the action to happen. (High control, low speed).

- HOTL (Phase 2): Silas had to say “No” to stop the action. (High speed, supervisory control).
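The two phases can be sketched in code as a difference in default behavior. This is a minimal illustration, not a real drone API: the function names `run_hitl` and `run_hotl`, the action strings, and the callback signatures are all hypothetical.

```python
from typing import Callable, List

Action = str


def run_hitl(actions: List[Action], approve: Callable[[Action], bool]) -> List[Action]:
    """HITL: an action executes only after explicit human approval."""
    executed = []
    for action in actions:
        if approve(action):  # no approval, no action
            executed.append(action)
    return executed


def run_hotl(actions: List[Action], veto: Callable[[Action], bool]) -> List[Action]:
    """HOTL: an action executes by default unless the human vetoes it."""
    executed = []
    for action in actions:
        if veto(action):  # the human steps in only to stop something
            continue
        executed.append(action)
    return executed


actions = ["prune leaf", "water soil", "prune red hat"]

# A human who has fallen asleep approves nothing and vetoes nothing.
print(run_hitl(actions, lambda action: False))  # []
print(run_hotl(actions, lambda action: False))  # all three actions run
```

With an absent human, the HITL loop does nothing while the HOTL loop does everything, including the mistaken "prune red hat" action. That is the trade-off between control and speed in miniature.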

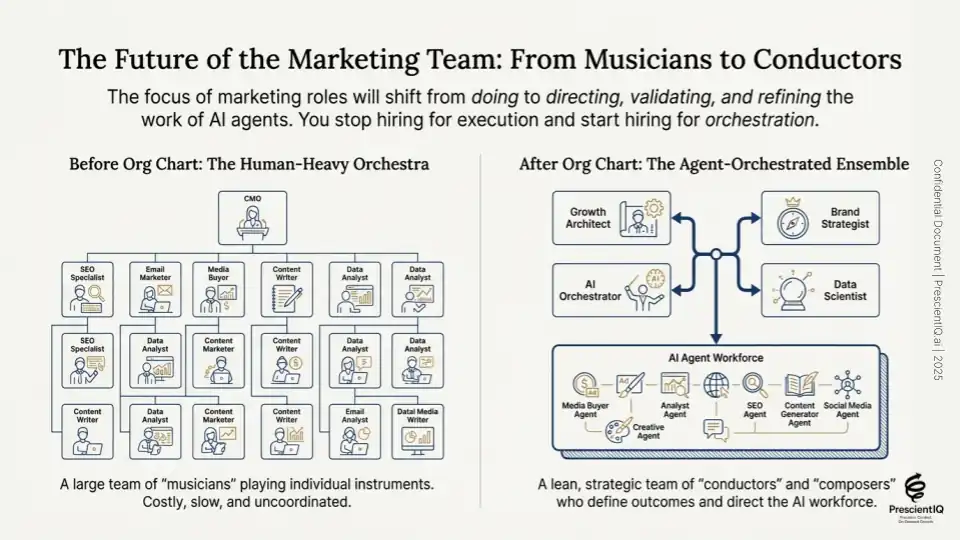


II. Understanding HITL and Human-on-the-Loop AI

A. Human-in-the-Loop (HITL) AI

HITL AI integrates human intelligence directly into the AI’s workflow, fostering a collaborative learning environment.

- Direct Human Involvement: Humans actively label data, validate results, provide feedback, and correct errors.

- Continuous Engagement: Human approval is often required before AI outputs reach end-users.

- High-Stakes & Nuance: Essential for tasks requiring ethical considerations, legal compliance, or handling complex edge cases.

- Entity Definitions:

- Artificial Intelligence (AI): Machines capable of human-like learning and problem-solving.

- Machine Learning (ML): A subset of AI where systems learn from data without explicit programming.

- Data Annotation: The process of labeling data to make it understandable for AI.

- Algorithmic Bias: Unfair outcomes in AI due to biased training data.

B. Human-on-the-Loop (HOTL) AI

Human-on-the-Loop AI positions humans as supervisors who monitor AI operations and intervene only when issues arise or difficult judgments are needed.

- Supervisory Role: Humans primarily observe the AI’s performance, allowing it to operate autonomously.

- Intervention as Needed: Human involvement is triggered by AI errors or complex decision points.

- Autonomy with Oversight: The AI functions independently but can be controlled or corrected by humans.

- Efficiency & Scalability: Suitable for repetitive tasks where speed is critical and a limited number of errors is acceptable.

- Entity Definitions:

- Autonomous Systems: Systems capable of operating without constant human intervention.

- Edge Cases: Rare or unusual situations not covered in the AI’s training data.

III. Divergence Between HITL and HOTL

The primary differences lie in the degree of AI autonomy and the timing of human intervention.

| Feature | Human-in-the-Loop (HITL) | Human-on-the-Loop (HOTL) |

|---|---|---|

| Human Involvement | Constant, integral part of the process. | Supervisory, intervening only when necessary. |

| Timing of Intervention | Before final results are generated. | During or after execution; the AI's actions are subject to override or correction. |

| Nature of Decisions | Humans directly make or approve decisions. | Humans oversee AI decisions and rectify problems. |

| Error Tolerance | Very low; high accuracy is paramount. | Higher; some errors are permissible. |

| Goal | Accuracy, reliability, ethics, and continuous learning. | Speed, efficiency, scalability with human supervision. |

| Risk Profile | Best for sensitive, high-stakes tasks. | Best for repetitive, scalable, lower-risk tasks. |

B. CEO’s Guide to Choosing Between HITL and HOTL

The decision between Human-in-the-Loop (HITL) and Human-on-the-Loop (HOTL) is a strategic one, often driven by the organization’s risk appetite, the nature of the task, and its financial objectives.

A CEO must weigh control against speed and cost against error tolerance.

1. Strategic Decision Matrix

A CEO can utilize the following framework to guide the choice:

| Strategic Pillar | Choose HITL When… | Choose HOTL When… |

|---|---|---|

| Risk Tolerance | The cost of error is catastrophic (e.g., patient death, massive financial loss, legal liability). | The cost of minor error is acceptable or recoverable (e.g., a wrong content tag, a slightly off inventory count). |

| Required Accuracy | Accuracy must be near 100%; human context, ethics, or nuanced judgment is non-negotiable. | Speed and scale are more important than absolute perfection (e.g., high-volume transaction processing). |

| Regulatory & Ethical Impact | The task is subject to strict regulatory oversight (e.g., EU AI Act high-risk category) or involves profound ethical judgment. | The task falls into a lower-risk category where existing legal frameworks are sufficient for oversight. |

| System Maturity | The AI model is new, complex, or still in the training/validation phase and requires continuous human feedback. | The AI model is highly mature, proven in production, and handles the vast majority of cases reliably. |

| Task Complexity | The task involves frequent “edge cases,” ambiguous data, or requires creative problem-solving (e.g., content creation, strategic planning). | The task is highly repetitive, clearly defined, and involves standardized data inputs (e.g., data entry, basic security monitoring). |
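One way to make the matrix concrete is to encode each row as a yes/no signal. The sketch below is illustrative only: the `TaskProfile` fields and the conservative rule "any HITL signal means HITL" are assumptions introduced here, not a rule stated in the matrix itself.

```python
from dataclasses import dataclass


@dataclass
class TaskProfile:
    """Hypothetical yes/no answers, one per row of the decision matrix."""
    catastrophic_error_cost: bool      # Risk Tolerance
    needs_near_perfect_accuracy: bool  # Required Accuracy
    strictly_regulated: bool           # Regulatory & Ethical Impact
    model_is_mature: bool              # System Maturity
    frequent_edge_cases: bool          # Task Complexity


def recommend_mode(task: TaskProfile) -> str:
    """Assumed rule: a single HITL signal is enough to recommend HITL."""
    hitl_signals = [
        task.catastrophic_error_cost,
        task.needs_near_perfect_accuracy,
        task.strictly_regulated,
        not task.model_is_mature,
        task.frequent_edge_cases,
    ]
    return "HITL" if any(hitl_signals) else "HOTL"


content_tagging = TaskProfile(False, False, False, True, False)
credit_approval = TaskProfile(True, True, True, True, False)
print(recommend_mode(content_tagging))  # HOTL
print(recommend_mode(credit_approval))  # HITL
```

A real organization would likely weight these pillars rather than treat them as equal switches; the all-or-nothing rule simply errs on the side of human control.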

2. Phased Implementation Approach

A common and often recommended strategy for a CEO is to implement a phased approach that mirrors the “Student Driver” analogy:

- Phase 1: HITL for Training and Validation (The Coach)

- Goal: Build the AI model, establish trust, and ensure data quality.

- Action: Start with 100% HITL to actively label data, validate initial outputs, and refine the core algorithm. This is an investment in accuracy.

- Phase 2: Hybrid Transition (The Co-Pilot)

- Goal: Increase efficiency while maintaining quality.

- Action: Transition to a system where the AI handles low-risk, high-confidence tasks independently, while routing high-risk or low-confidence decisions to a human for approval (HITL).

- Phase 3: HOTL for Scale (The Supervisor)

- Goal: Maximize speed and scalability.

- Action: Once the AI demonstrates consistent reliability (e.g., 99.5% accuracy), switch to HOTL, where human intervention is reserved only for system alerts, errors, or high-stakes overrides. The human role shifts from active participation to vigilance and risk management.
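The Phase 2 hybrid routing described above can be sketched as a simple rule. The `Decision` fields, the `route` function, and the 0.9 confidence threshold are hypothetical values chosen for illustration; a real system would tune the threshold against its own error data.

```python
from dataclasses import dataclass

CONFIDENCE_THRESHOLD = 0.9  # hypothetical cutoff, not a recommended value


@dataclass
class Decision:
    description: str
    confidence: float  # model's self-reported confidence, 0.0 to 1.0
    high_risk: bool    # flagged by business rules, not by the model


def route(decision: Decision, threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Send high-risk or low-confidence decisions to a human; automate the rest."""
    if decision.high_risk or decision.confidence < threshold:
        return "human_review"  # HITL path: wait for approval
    return "auto_execute"      # autonomous path: act without approval


print(route(Decision("tag product photo", 0.97, high_risk=False)))  # auto_execute
print(route(Decision("tag product photo", 0.62, high_risk=False)))  # human_review
print(route(Decision("approve large loan", 0.99, high_risk=True)))  # human_review
```

Raising the threshold pushes more work to humans (closer to Phase 1), while lowering it moves the system toward Phase 3.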

The selection depends on risk assessment, required accuracy, decision complexity, and scalability needs.

- HITL is essential for critical applications such as medical diagnosis.

- HOTL is suitable for high-volume, simpler tasks where the AI is generally reliable.

- The HITL market is projected to grow significantly, reaching $17.6 billion by 2033 from $3.2 billion in 2024, a 20.8% compound annual growth rate, indicating the increasing recognition of the importance of human oversight.
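As a quick sanity check, the quoted growth rate can be recomputed from the two market figures using the standard compound annual growth rate formula, (end / start)^(1 / years) - 1. The helper name `cagr` is introduced here for illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.2 means 20%)."""
    return (end_value / start_value) ** (1 / years) - 1


# $3.2 billion in 2024 growing to $17.6 billion in 2033 (9 years).
rate = cagr(3.2, 17.6, 2033 - 2024)
print(f"Implied CAGR: {rate:.2%}")
```

The result comes out at roughly 21% per year, which is consistent with the quoted 20.8% once rounding of the dollar figures is taken into account.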

IV. Real-World Applications of HITL and HOTL

A. HITL AI Applications

HITL AI is transforming industries where accuracy, ethical judgment, and the cost of errors are high.

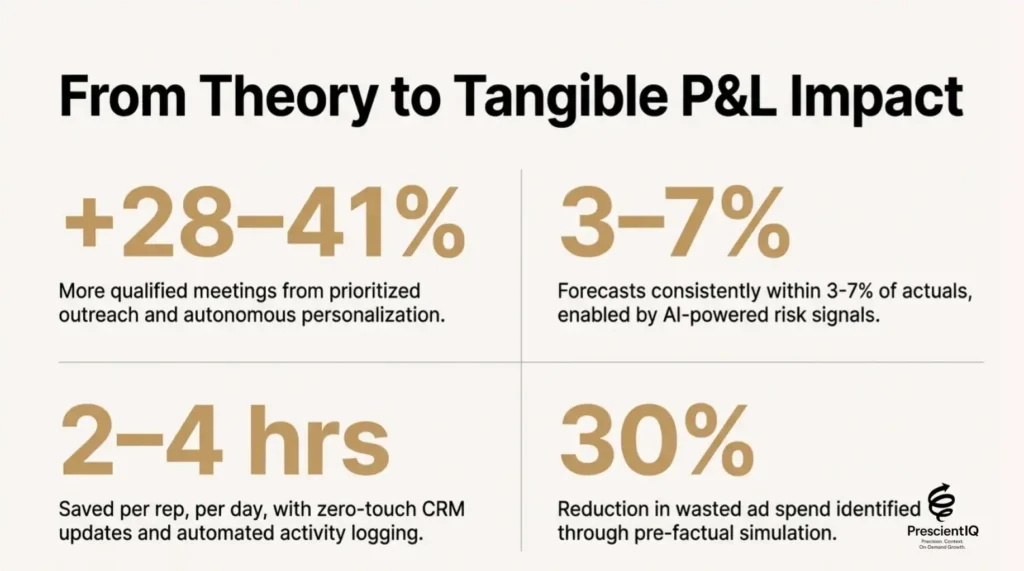

- Healthcare: AI assists in identifying potential issues, with doctors making final treatment decisions, improving accuracy by 30% in complex cases.

- Fraud Detection: AI flags suspicious activities, but humans make the final determination to ensure legal compliance and prevent financial losses.

- Content Moderation: AI identifies inappropriate content, while humans provide contextual understanding for decision-making.

- HITL can reduce model training time by up to 25%.

B. HOTL AI Applications in Marketing: A CEO’s Perspective

HOTL (Human-on-the-Loop) AI offers marketing executives a powerful tool to achieve massive scale and hyper-speed personalization without sacrificing quality control.

The human, in this context, moves from a bottleneck in content approval to an editor focused on brand safety, strategic integrity, and ethical messaging.

1. Real-Time Bid Optimization and Ad Serving

In programmatic advertising, decision-making speed is critical.

- AI Function: An AI system autonomously adjusts bidding strategies, optimizes ad placements, and allocates budget across thousands of campaigns in milliseconds based on real-time performance data (e.g., click-through rates, conversion rates).

- Human Oversight (HOTL): A marketing analyst monitors a dashboard for major anomalies (e.g., a campaign unexpectedly draining budget, an algorithm mistakenly bidding on irrelevant high-cost inventory). The human sets the overall strategic guardrails and only intervenes with an immediate override if a catastrophic budget overspend or brand safety violation is detected.

- CEO Benefit: Maximum return on ad spend (ROAS) and unparalleled market responsiveness without the need for 24/7 manual optimization.
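The budget guardrail described above could be sketched as a check that compares actual spend with the spend expected at this point in the day. Everything here is hypothetical: the `CampaignSnapshot` fields, the `needs_override` function, and the 1.5x overspend factor are illustrative assumptions, not part of any ad platform's API.

```python
from dataclasses import dataclass


@dataclass
class CampaignSnapshot:
    name: str
    daily_budget: float             # budget cap set by the human
    spend_today: float              # amount the AI has spent so far
    fraction_of_day_elapsed: float  # 0.0 to 1.0


def needs_override(snapshot: CampaignSnapshot, overspend_factor: float = 1.5) -> bool:
    """Flag a campaign whose spend is far ahead of an even daily pace."""
    expected_spend = snapshot.daily_budget * snapshot.fraction_of_day_elapsed
    return snapshot.spend_today > expected_spend * overspend_factor


on_pace = CampaignSnapshot("spring_sale", 1000.0, 400.0, 0.5)
draining = CampaignSnapshot("retargeting", 1000.0, 900.0, 0.5)
print(needs_override(on_pace))   # False
print(needs_override(draining))  # True
```

The AI keeps bidding autonomously; the analyst only sees the campaigns for which this check returns `True`. An even-pace baseline is a simplification, since real spend often follows daily traffic patterns.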

2. Dynamic Content Personalization at Scale

Modern customer journeys require content that adapts instantly to the user’s context.

- AI Function: AI generates thousands of personalized subject lines, email body variants, or website copy adjustments for different customer segments (e.g., based on different pain points or calls to action). It deploys these variations instantly.

- Human Oversight (HOTL): The brand manager periodically reviews the AI-generated content based on flags (e.g., A/B test results showing a particularly unusual or underperforming variant). They intervene only to “blacklist” specific phrases, correct factual errors in the content pool, or re-inject brand tone if the AI drifts too far from the core message.

- CEO Benefit: Hyper-personalization for millions of customers simultaneously, leading to higher engagement and conversion rates, while maintaining strict brand consistency.

3. Automated Social Media Crisis Monitoring

Protecting brand reputation requires immediate, scalable monitoring.

- AI Function: The AI continuously monitors billions of social media posts, news articles, and forums, autonomously flagging mentions that exceed a predefined threshold for negative sentiment or volume. For common, low-risk negative comments, the AI may auto-generate and post a templated, empathetic response.

- Human Oversight (HOTL): A public relations specialist only receives alerts when a high-severity crisis is identified (e.g., a major media outlet report, a significant jump in coordinated negative posts). The human intervenes to take manual control, draft a strategic response, or initiate a full incident response plan.

- CEO Benefit: Instantaneous detection of potential reputational threats and rapid response, minimizing damage and ensuring the human crisis team is only focused on true emergencies.
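The escalation logic above can be sketched as a three-way triage. The function name `triage`, its parameters, and both threshold values are hypothetical; a real deployment would derive them from historical mention data.

```python
def triage(
    mention_count: int,
    negative_share: float,       # fraction of mentions with negative sentiment
    from_major_outlet: bool,
    volume_threshold: int = 500,  # hypothetical volume cutoff
    negative_threshold: float = 0.6,  # hypothetical sentiment cutoff
) -> str:
    """Decide whether the AI handles a topic alone or alerts the PR specialist."""
    is_negative = negative_share >= negative_threshold
    is_high_volume = mention_count >= volume_threshold

    # High-severity cases go to the human: major media, or a surge of negativity.
    if from_major_outlet or (is_negative and is_high_volume):
        return "alert_human"
    # Common, low-risk negative comments get a templated reply from the AI.
    if is_negative:
        return "auto_reply"
    return "no_action"


print(triage(40, 0.8, from_major_outlet=False))    # auto_reply
print(triage(2000, 0.75, from_major_outlet=False)) # alert_human
print(triage(10, 0.1, from_major_outlet=True))     # alert_human
```

Only the `alert_human` branch consumes the crisis team's attention, which is what keeps the human focused on true emergencies.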

| HOTL Marketing Application | Key AI Benefit (Speed & Scale) | Key Human Oversight (Control & Safety) | CEO Value Proposition |

|---|---|---|---|

| Programmatic Advertising | Real-time budget allocation. | Override for budget overspend/errors. | Maximized ROAS. |

| Content Personalization | Instant variant deployment. | Curation of brand tone and message. | Increased engagement and conversion. |

| Crisis Monitoring | 24/7 high-volume social listening. | Intervention on high-severity threats. | Minimized brand reputation risk. |

HOTL AI excels in scenarios that demand speed and scale, with humans serving as a safety net.

- Financial Trading: Analysts monitor AI trading systems and can override trades deemed risky, reducing false positives by 15-20%.

- Autonomous Vehicles & Drones: Humans supervise operations from control centers and can take manual control if necessary.

- Industrial Automation & Cybersecurity: AI monitors for threats, and humans review alerts.

- Approximately 70% of companies using AI report that human oversight enhances their decision-making.

| Industry | HITL Use Cases | HOTL Use Cases |

|---|---|---|

| Healthcare | Diagnosis review, treatment planning. | Remote patient monitoring, automated drug dispensing. |

| Finance | Fraud case adjudication, credit approval. | High-speed trading oversight, anomaly detection. |

| Autonomous Vehicles | Labeling complex scenarios, manual takeover. | Self-driving operation monitoring, manual override. |

| Content Moderation | Contextual review of flagged content. | Automated filtering of obvious violations. |

| Manufacturing | Complex defect quality checks, real-time adjustments. | Industrial robot monitoring, production anomaly detection. |

| Cybersecurity | Complex threat investigation, incident response. | Automated threat flagging, network monitoring. |

V. Future Trends and Innovations in Human-AI Collaboration

A. Key Trends Shaping HITL and HOTL

The future emphasizes AI as a human augmentation tool, driven by several factors:

- Regulations: Expect regulations requiring human oversight for AI in critical areas by 2026-2030, promoting accountability and transparency.

- Evolving Human Roles: Humans will transition from data labeling to roles like AI coaches and risk managers, focusing on explaining AI decisions.

- Hybrid AI Systems: Integrated systems combining human judgment with AI capabilities will become standard.

- Explainable AI (XAI): By 2030, AI tools will likely include “explanation interfaces” to clarify AI decision-making processes.

B. Innovations Driving Human-AI Partnerships

Technological advancements are enhancing the synergy between humans and AI:

- AI with Emotional Intelligence: AI can now detect team morale and burnout, improving collaboration.

- Personalized AI: AI systems adapt to individual work styles and offer proactive suggestions.

- Generative AI: Assists in content creation, coding, report drafting, and brainstorming.

- VR/AR Integration: Immersive collaboration environments with AI-powered avatars and real-time translation.

- Reinforcement Learning from Human Feedback (RLHF): Human input directly shapes AI learning processes.

VI. Challenges and Considerations in Implementing HITL and HOTL

A. Common Hurdles in Integrating Humans into AI Systems

- Scalability and Cost: Human involvement can slow processes and incur high costs for hiring, training, and management.

- Reliance on Human Expertise: System operations can be disrupted by the unavailability of key personnel.

- Consistency of Human Feedback: Maintaining uniformity across a large team’s feedback can be challenging due to individual biases.

B. Overcoming Automation Complacency and Bias

- Automation Complacency: To combat over-reliance on AI, clearly define human roles, provide sufficient data for informed decisions, empower override capabilities, and conduct regular training emphasizing critical evaluation.

- Bias Mitigation: While HITL aims to reduce algorithmic bias, human reviewers can introduce their own biases. Ensure diverse review teams and robust data governance.

- Data Privacy and Security: Increased human involvement necessitates stringent data protection measures.

- Humans are integral to hybrid intelligence systems.

| Challenge Area | Human-in-the-Loop (HITL) Impact | Human-on-the-Loop (HOTL) Impact |

|---|---|---|

| Scalability & Cost | Potential bottlenecks and high costs for specialized experts. | Supervisors must remain vigilant; costly interventions are possible. |

| Human Reliability | Inconsistent feedback, potential for human error. | Risk of complacency, slower reaction times. |

| Integration Complexity | Requires intricate feedback loops. | Needs clear monitoring and override mechanisms. |

| Data Privacy/Security | Increased exposure of sensitive data. | Secure oversight of large datasets is crucial. |

| Defining Roles | Ensuring genuine human engagement. | Establishing clear intervention protocols. |

VII. Strategic Imperatives for Human-AI Synergy

A. Designing for Effective Human-AI Collaboration

Organizations should design AI systems that augment human capabilities.

- Establish clear points for human intervention and feedback mechanisms to improve AI.

- Provide comprehensive training for personnel on data, AI, and hybrid roles.

- Prioritize Explainable AI (XAI) to build trust and enable ethical AI use.

B. The Vision of an Intelligent Future with HITL and HOTL

A truly intelligent future will be characterized by augmented intelligence, where humans contribute creativity and empathy, and AI provides data processing and consistency.

- AI will continuously learn from human interactions.

- Human roles will shift towards AI oversight.

- The goal is to balance automation with ethics and human values.

- Over 85% of AI failures are attributed to a lack of human oversight, highlighting the importance of synergy.

- Future systems may include Human-out-of-the-Loop (HOOTL) operation for highly reliable AI, with the ability to revert to HOTL or Human-in-Command (HIC) for ethical control.

In finance, the distinction is often between risk mitigation (HITL) and market speed (HOTL).

Comparison: HITL vs. HOTL in Finance

| Feature | Human-in-the-Loop (HITL) | Human-on-the-Loop (HOTL) |

|---|---|---|

| Role of Human | The Gatekeeper: Approves every transaction or decision before execution. | The Supervisor: Monitors the stream of autonomous decisions and intervenes only for errors. |

| Primary Pro | High Accuracy & Trust: Essential for high-stakes decisions where an error could ruin a reputation or violate laws. | Speed & Scalability: Can process millions of transactions per second; essential for arbitrage and real-time markets. |

| Secondary Pro | Regulatory Compliance: Easier to explain to auditors because a human signed off on the specific decision. | Efficiency: Frees up human analysts to focus on complex strategy rather than routine processing. |

| Primary Con | Bottlenecking: The process moves only as fast as the human can read. It cannot keep up with real-time market fluctuations. | Runaway Risk: If the algorithm has a bug (e.g., a “flash crash”), it can lose millions in seconds before the human can hit the kill switch. |

| Secondary Con | Cost & Fatigue: Expensive to employ humans for volume tasks; humans get tired and make errors after hours of reviewing data. | Automation Complacency: A supervisor who rarely needs to intervene can lose vigilance and react too slowly when an override is finally required. |

VIII. Conclusion: Achieving Intelligent Synergy

Key Takeaways

- Human-in-the-Loop (HITL): Humans are directly involved in the AI process, crucial for complex decisions and AI improvement.

- Human-on-the-Loop (HOTL): Humans supervise AI and intervene when necessary, prioritizing speed.

- Both HITL and HOTL enhance AI’s intelligence, ethics, and dependability by keeping it on track.

- The choice between HITL and HOTL depends on risk tolerance, task complexity, and desired control.

- Future trends include mixed systems, regulatory frameworks for human oversight, and the development of explainable AI.

The journey from Human-in-the-Loop (HITL) to Human-on-the-Loop (HOTL) represents the strategic evolution of AI maturity.

While HITL provides the necessary human judgment, ethical grounding, and high-fidelity data labeling required to build reliable models, HOTL enables organizations to unlock unparalleled speed and scale.

The choice between them is not absolute but dynamic, resting on a careful assessment of risk tolerance, required accuracy, and the specific phase of the AI lifecycle.

Ultimately, both approaches are indispensable, ensuring that AI systems remain accountable, trustworthy, and aligned with human values as they move from the training environment to autonomous operation.

Achieving true synergy means designing systems that leverage the unique strengths of both parties.

AI excels at processing vast data volumes, ensuring consistency, and operating at high velocity; humans provide the critical non-algorithmic intelligence—context, empathy, ethical reasoning, and domain expertise—necessary to navigate “edge cases” and high-stakes decisions.

The increasing adoption of hybrid models and the push for Explainable AI (XAI) underscore a future where human judgment is not replaced but augmented. This partnership moves beyond simple efficiency to create a robust, resilient, and more intelligent organizational infrastructure.

As AI permeates more critical sectors, the strategic imperative for CEOs and leaders is to focus less on full automation and more on defining the perfect ‘loop.’

By establishing clear intervention protocols, investing in vigilance training to combat automation complacency, and continuously refining the feedback mechanisms between human and machine, organizations can responsibly harness the power of AI.

The future of AI is not a fully automated world, but an Augmented Intelligence ecosystem where human oversight—whether active (HITL) or supervisory (HOTL)—remains the final guarantor of safety, compliance, and ethical performance.